A few weeks ago, the Department for Education announced the Strengthening Families, Protecting Children programme. This is an £84 million investment by the department in taking three models of social work practice, tested as part of the first round of the Innovation Programme – those of Leeds, Hertfordshire and North Yorkshire – and supporting their implementation over the next several years in 20 local authorities around England.

The What Works Centre is delighted to describe our involvement in this project and our role as evaluators. Each of the three models was, of course, evaluated as a part of the Innovation Programme, and the reports for Leeds, North Yorkshire and Hertfordshire make interesting reading, all finding evidence of promise. These evaluations, as well as the continued strong performance of all three local authorities, have prompted the interest in scaling up the approaches.

The goal of this evaluation is therefore a bit different – we want to find out the impact of the roll-out on outcomes for young people, their families, and social workers in the local authorities that are receiving the new models.

The ‘gold standard’ way of doing this kind of thing is with a randomised controlled trial (RCT), in which families, or perhaps social workers, receive either the business-as-usual model or the new one, with the choice made by the flip of a coin rather than by someone’s judgement, so that the comparison isn’t biased. That’s clearly not appropriate here, where each of the three models requires whole system change, and buy-in from across children’s services – something that wouldn’t be possible if some social workers were still doing things the old way. This is not just a problem for colleagues trying to make the programme work, but for us as evaluators – we can’t make major changes to a programme just to accommodate the evaluation of that programme.

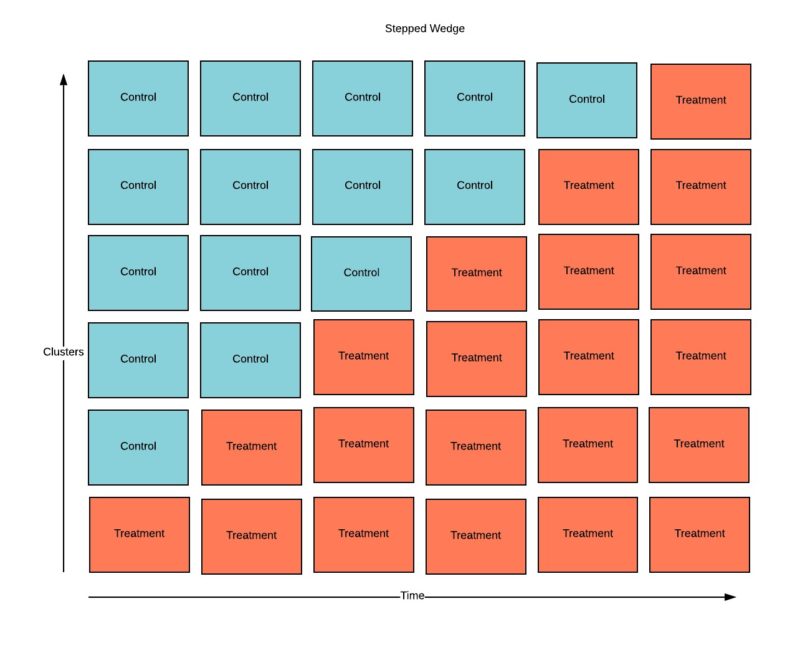

That’s why we’ve been working for the last five months with Leeds, Hertfordshire and North Yorkshire, as well as officials from the DfE, to develop a pragmatic alternative to an RCT which lets us maximise what we learn from the evaluation, while not getting in the way of delivery. What we’ve settled on is a “pragmatic stepped wedge” trial, one very much shaped by our LA partners, in which the order in which the 20 partner authorities receive the new model will be randomly selected. One might start in September, another six months later, and so on, like in the diagram below. This isn’t as ‘pure’ as an RCT, but it better reflects the design of the programme itself.
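For readers who like to see the mechanics, here is a minimal sketch of how a randomised roll-out order could be drawn. It is an illustration only, not the procedure used in the trial: the authority labels (`LA-01` and so on), the function name `assign_rollout_order`, the choice of four start waves and the seed are all assumptions made for the example. Only the figure of 20 authorities and the six-month spacing come from the description above.

```python
import random


def assign_rollout_order(authorities, n_waves, seed=None):
    """Randomly assign each authority to a start wave (0 = earliest).

    Hypothetical helper for illustration; not the trial's actual procedure.
    """
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    shuffled = list(authorities)
    rng.shuffle(shuffled)
    # Deal the shuffled authorities into waves in turn, so that
    # wave sizes differ by at most one.
    return {la: i % n_waves for i, la in enumerate(shuffled)}


# Placeholder labels standing in for the 20 partner authorities.
authorities = [f"LA-{i:02d}" for i in range(1, 21)]

# Assumption: four waves, six months apart.
schedule = assign_rollout_order(authorities, n_waves=4, seed=2019)

for la, wave in sorted(schedule.items(), key=lambda item: item[1]):
    print(f"{la}: wave {wave}, starting around month {wave * 6}")
```

Because every authority eventually receives the new model, authorities in later waves act as the comparison group for those in earlier waves until their own start date arrives.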

This is the largest evaluation of its type ever conducted in children’s social care, anywhere in the world as far as we’re aware, and it’s something that we’re very excited to be a part of. As well as letting us bring new approaches to bear, the scale of the project should also let us answer questions not just about the effects of each model, but about where and when each one works best. As Eileen Munro says – you can’t grow roses in concrete, and in the spirit of Gardeners’ Question Time, these three evaluations should help us learn under what conditions different sorts of rose are most likely to thrive, and help individual authorities, as well as the DfE, work out which models to scale where in the future.